Capabilities Modelling

Capabilities Modelling is a way of describing the capabilities available to an organisation across all of its functions. It is often used to describe the entire organisation on a single page at a very high level, yet structured so that it remains a meaningful tool for planning, debate and analysis. A capability in this context is a defined function, or set of functions, that the organisation uses or delivers and that defines the organisation, to itself and to others, at a high level.

Strawman Workshopping Example

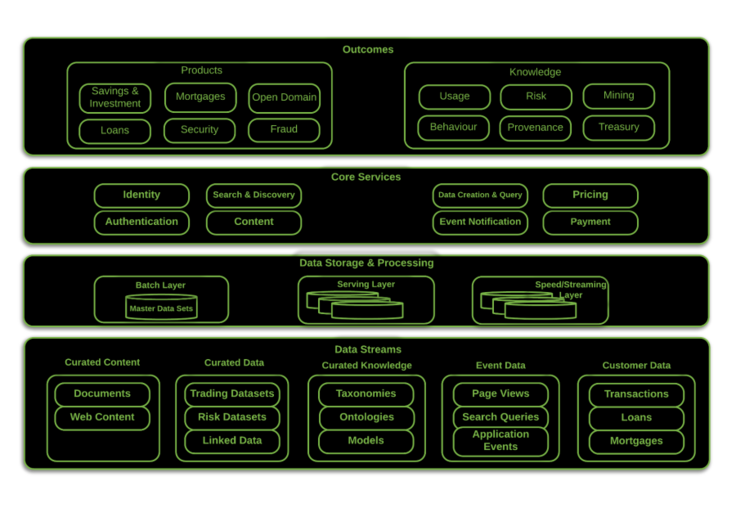

Four Capability Categories

There are four major categories of capability. Within those categories are further sub-categories, depending on the context, and within those sub-categories are the actual capabilities. Each of the four major categories is detailed separately, but in summary they are:

- Data Streams

  - The collection of data sets or collected data around specific data types or entities.

- Data Storage & Processing

  - The various storage and processing capabilities the organisation uses and understands.

- Core Services

  - These are the capabilities available as services across the organisation and shared amongst different delivery systems.

- Outcomes

  - These are the capabilities that make use of all of the internal capabilities already described in the other three categories.
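The three-level hierarchy described above (category, sub-category, capability) can be sketched as a small data structure. This is only an illustration: the class names, the `add` helper and the "Identity" sub-category are hypothetical and not part of the straw man, and the entries are examples rather than a definitive list.

```python
from dataclasses import dataclass, field


@dataclass
class SubCategory:
    name: str
    capabilities: list[str] = field(default_factory=list)


@dataclass
class Category:
    name: str
    description: str
    sub_categories: dict[str, SubCategory] = field(default_factory=dict)

    def add(self, sub_category: str, capability: str) -> None:
        """Record a capability under a sub-category, creating it if needed."""
        sub = self.sub_categories.setdefault(sub_category, SubCategory(sub_category))
        sub.capabilities.append(capability)


# The four major categories from the summary above.
model = {
    c.name: c
    for c in [
        Category("Data Streams", "Collected data around specific data types or entities"),
        Category("Data Storage & Processing", "Storage and processing the organisation uses"),
        Category("Core Services", "Services shared amongst different delivery systems"),
        Category("Outcomes", "Capabilities that make use of the other three categories"),
    ]
}

# Illustrative entries only (assumed); a real model comes out of the workshop.
model["Data Streams"].add("Event Data", "User events")
model["Data Streams"].add("Event Data", "Application events")
model["Core Services"].add("Identity", "Authentication")

for category in model.values():
    for sub in category.sub_categories.values():
        print(f"{category.name} / {sub.name}: {', '.join(sub.capabilities)}")
```

Keeping capabilities as plain names under sub-categories mirrors the single-page nature of the model; anything richer (owners, systems, maturity) can be added once the workshop shows it is needed.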

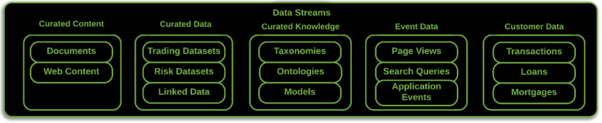

Data Streams

In this straw man there are five sub-categories of Data Streams (or, if preferred, identifiable data sources).

Curated Content

This is content that the organisation has editorial control over. For a content company it would include the content on its web pages, for whichever content types the organisation concerns itself with. In a more general sense it includes all of the informational pages and documents. It is also important to remember that the Capability Model is not limited to capabilities delivered through technology. An archived document produced on more manual systems is still a data set or piece of content, though it may not be readily available.

Curated Data

This is data which the organisation has either produced itself or acquired, then cleaned, aggregated and made available for further analytical processing, either alone or with other data sets. It is the classic big data capability (regardless of size) of being able to prepare data sets, manage them and their provenance (expressed as knowledge later), and generate insights and knowledge.

Curated Knowledge

This is more about the structure of knowledge domains than the knowledge that is mined or generated. It includes domain ontologies, taxonomies, models and vocabularies. Gold sets of data for training are better treated as an example of Curated Data.

Event Data

This is all of the event data captured and processed or held by the organisation. It includes application events, user events and process events.

Customer Data

As well as the data provided by the organisation's customers and the transactions made by them or for them, this includes the behavioural data captured or inferred, whether in a standard CRM sense or from use of and behaviour on the organisation's website, including any recommendations and their outcomes. Recording where and when data about a customer was captured is becoming increasingly important, not simply for BI purposes but also, in the case of individuals, for compliance with increasingly rigorous personal data regulation.

Data Storage & Processing

This category is structured to describe a lambda architecture driving the processing of data in an organisation. It's a very opinionated view on processing data and many organisations wouldn't recognise all of the architecture as being either in use or appropriate.

It's worth keeping as an example, though, as all data architectures rely upon batch processing and any reasonable organisation will use a serving layer to deliver views of data to service and presentation layer applications. Many organisations, however, will not yet be using or contemplating a speed layer, where batch views are improved or predicted in 'real time'.

It should also go without saying that the Data Storage & Processing category should reflect the organisation being modelled and should not be constrained to any particular flavour of architecture. The definitions below are taken from the Wikipedia article on lambda architecture.

Batch Layer

The batch layer precomputes results using a distributed processing system that can handle very large quantities of data. The batch layer aims at perfect accuracy by being able to process *all* available data when generating views. This means it can fix any errors by recomputing based on the complete data set, then updating existing views. Output is typically stored in a read-only database, with updates completely replacing existing precomputed views.

Serving Layer

Output from the batch and speed layers is stored in the serving layer, which responds to ad-hoc queries by returning precomputed views or building views from the processed data.

Speed/Streaming Layer

The speed layer processes data streams in real time and without the requirements of fix-ups or completeness. This layer sacrifices throughput as it aims to minimize latency by providing real-time views into the most recent data. Essentially, the speed layer is responsible for filling the "gap" caused by the batch layer's lag in providing views based on the most recent data. This layer's views may not be as accurate or complete as the ones eventually produced by the batch layer, but they are available almost immediately after data is received, and can be replaced when the batch layer's views for the same data become available.
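The way the three layers fit together can be illustrated with a toy page-view counter. This is a minimal in-memory sketch, not a real distributed system: the event log, the `BATCH_CUTOFF` value and the function names are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical event log: (timestamp, page) pairs.
EVENTS = [
    (1, "home"), (2, "pricing"), (3, "home"),  # covered by the last batch run
    (4, "home"), (5, "docs"),                  # arrived after the last batch run
]
BATCH_CUTOFF = 3  # assumed: the batch layer last ran over timestamps <= 3


def batch_view(events, cutoff):
    """Batch layer: recompute the view from scratch over all data up to the cutoff."""
    return Counter(page for ts, page in events if ts <= cutoff)


def speed_view(events, cutoff):
    """Speed layer: incrementally count only events the batch layer has not covered."""
    view = Counter()
    for ts, page in events:
        if ts > cutoff:
            view[page] += 1
    return view


def serve(page, batch, speed):
    """Serving layer: answer a query by combining the two precomputed views."""
    return batch[page] + speed[page]


batch = batch_view(EVENTS, BATCH_CUTOFF)
speed = speed_view(EVENTS, BATCH_CUTOFF)
print(serve("home", batch, speed))  # prints 3 (2 from batch + 1 from speed)
```

When the batch layer next runs with a later cutoff, its recomputed view replaces the old one and the speed view for that period can be discarded, which is the "gap filling" behaviour described above.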

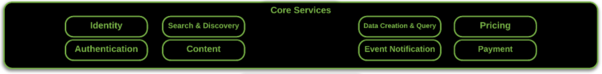

Core Services

There is a set of core services that is suitable for most organisations as a starting point.

In the straw man most, if not all, of the capabilities will be present in any reasonably sized organisation that sells products and services and manages and processes data. Even if an organisation has no identity management and authentication for customers, it undoubtedly will have them for its own internal use, even if that simply relies on capabilities embedded within vendors' operating systems and networks.

A capability doesn't have to be fully formed or complete to be mapped. Indeed, mapping is one way of exposing capabilities that need developing and improving.
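One way to make incomplete capabilities visible is to record a rough status alongside each mapped capability. The sketch below is an assumption rather than part of the straw man: the `Status` values, the `needs_work` helper and the "Search" entry are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    MISSING = "missing"
    PARTIAL = "partial"
    COMPLETE = "complete"


@dataclass(frozen=True)
class Capability:
    name: str
    status: Status


# Illustrative Core Services entries (assumed, not taken from the slide).
core_services = [
    Capability("Identity management", Status.PARTIAL),
    Capability("Authentication", Status.COMPLETE),
    Capability("Search", Status.MISSING),
]


def needs_work(capabilities):
    """Return the names of capabilities that are mapped but not yet complete."""
    return [c.name for c in capabilities if c.status is not Status.COMPLETE]


print(needs_work(core_services))  # prints ['Identity management', 'Search']
```

A three-value status is deliberately coarse; it is enough to prompt discussion in a workshop without implying a precise maturity score.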

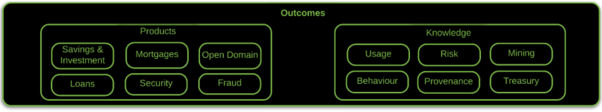

Outcomes

Outcomes are the visible, usable capabilities of an organisation. They use the core services, data processing and data sets in combination, either as a direct set of products and services to sell and distribute, or as an internal set of products which themselves inform and improve the delivery of products and services to the customer.

Products

In workshopping it should become apparent that the Products category is made up not of product names but of capabilities that can be separately identified. They could be considered as broad use cases or as major features. The flexibility this gives the organisation enables strategic planning around the capabilities that are core business, and helps it recognise capabilities that are either missing or incomplete.

Knowledge

The Knowledge category includes all of the capabilities around BI, but also fundamental capabilities such as understanding the provenance of all data and the ways in which it is used. It could also include the results of Machine Learning and provide the knowledge sets (and knowledge graphs) used in customer-facing products.

Exercise

The members of the Workshop are split into groups so that they can work separately on different kinds of Customer or Client. The Exercise consists of:

- Each group chooses one Customer or Client with which they're familiar.

- They should make a list of the capabilities that their IT and systems deliver for them.

- They should then try to categorise them, using the example slide as needed but without being constrained by it.

- Afterwards each group presents what they have found to the room.