Walls and Trust Nothing

At its simplest level, the most obvious feature of the Walled Garden is the Wall. Walls have all sorts of connotations for us, especially at this moment in history, and they begin with protection by exclusion. Being surrounded by a wall gives us a feeling of security, and of control over who or what can get in and who or what leaves. In its original use in a real garden, the wall was much more about protection, shelter and providing the right environment for plants and crops. The surrounding wall warms in the sun and raises the ambient temperature for plants in the north of the garden; it shelters the garden from prevailing and strong winds; and it organises the land, dividing it up for management.

Do the walls around our information systems share similar nurturing properties?

Yes. You can make the case that treating the Wall as an interface, or conversely treating interfaces as walls, lets the code within those walls consume and provide services and information specific to those interfaces (or walls). This stretches the metaphor of the Wall beyond a concept that merely defines infrastructure and the Enterprise. We'll get onto that soon.
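
To make the interface-as-wall idea a little more concrete, here is a minimal Python sketch. The names `StockWall`, `Warehouse` and `can_fulfil` are hypothetical, invented purely for illustration. The consumer only ever depends on the interface; whatever sits behind it stays inside the wall.

```python
from typing import Protocol


class StockWall(Protocol):
    """Hypothetical interface acting as the 'wall': the only sanctioned way in or out."""

    def stock_level(self, sku: str) -> int: ...


class Warehouse:
    """Code inside the wall. By convention, _levels is never touched from outside."""

    def __init__(self) -> None:
        self._levels = {"seed-01": 40, "seed-02": 0}

    def stock_level(self, sku: str) -> int:
        # Unknown items are reported as zero stock rather than exposing internals.
        return self._levels.get(sku, 0)


def can_fulfil(wall: StockWall, sku: str, qty: int) -> bool:
    # The consumer knows only the interface, not what lies behind it.
    return wall.stock_level(sku) >= qty
```

Any other implementation that provides `stock_level` could stand behind the same wall without the consumer changing, which is the sense in which the interface both shelters and defines what lives inside it.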

But is this feeling of security really justified, or is it just a security blanket, merely comforting? If the wall is so impenetrable that nothing external can enter, even when invited by an internal request, is that security? Is that safer? Yes, it's safer. It's how the world was before the public internet and external services: each organisation, and each installation of an organisation, a locked-down, impenetrable lump.

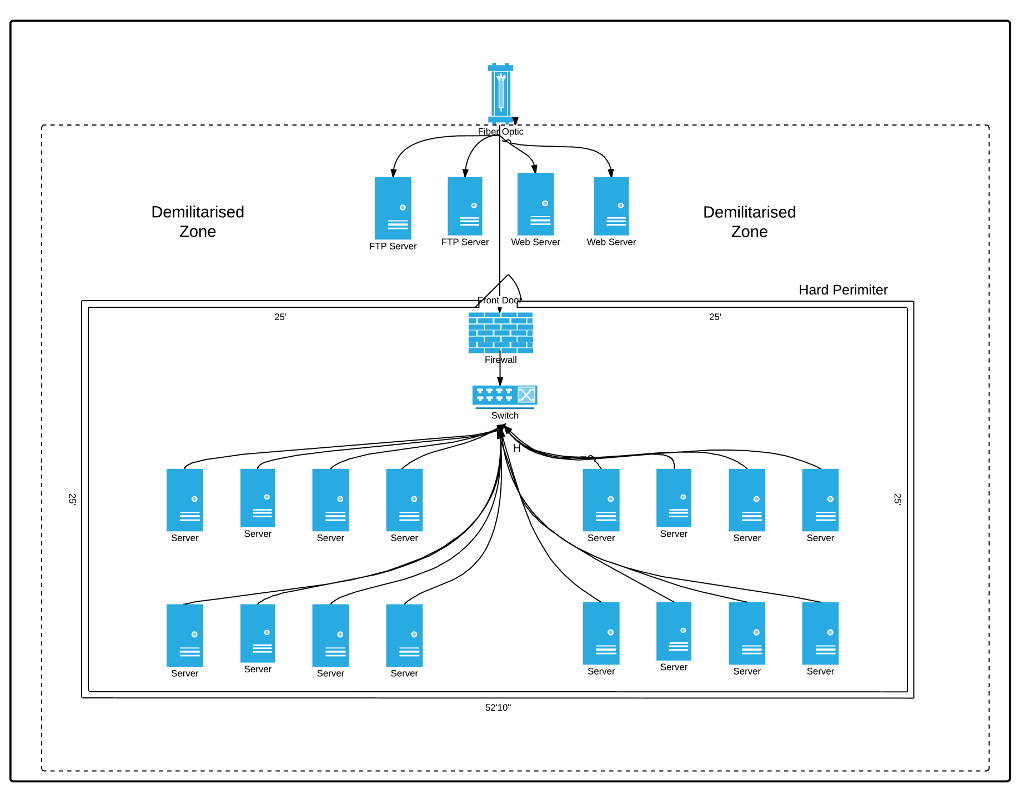

Well, except when it wasn't: when the area **outside** the wall had its own perimeter space, a DMZ (demilitarised zone) with a public interface, probably to some packet-switching network. Files arrived in the DMZ, and files were dropped there for external systems to pick up, probably using FTP. This was fine while there was little interconnectivity between organisations, but even before the public internet there was a need to exchange data, and a whole set of protocols such as EDI existed to do it (somewhat arthritically). When the public internet and the Web did arrive, the same model was reused, with web servers sitting in the DMZ outside the trusted area. This is when trust started to leak through the hard perimeter. The web servers needed content and data, and had to pass any transactions through to the internal systems.

Sure, copies or extracts of databases could be staged in the DMZ, but data still had to flow at some point, and the means of security remained the same: once across the firewall, almost everything was addressable, and if access was controlled at all it was by account and password. On the whole, once inside a network space, every device was potentially reachable. The combination of web browsers, web services and thin or non-existent interfaces between service and data led to exploits ranging from minor to magnificently catastrophic. It still does.

Considerable effort went into network architectures that try to separate applications, data and control. That works at the infrastructure level, where virtual networks can be kept apart, but it leaves the problem of identity and authorisation unsolved. Relying on IP addresses and routing rules, whether in firewalls, routers, switches or servers, does not scale, and the same problem is easily repeated in the software-defined networking of cloud architectures.
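
The difference between trusting a location and trusting an identity can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real access-control system: the `10.0.0.0/8` "inside" range, the shared HMAC key standing in for a proper identity provider, the `billing-svc` caller and the grant table are all hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical "inside the wall" address range.
INTERNAL = ip_network("10.0.0.0/8")

# Hypothetical shared key; a real system would use a proper identity provider.
SECRET = b"demo-signing-key"

# Hypothetical grants: which identity may perform which action.
GRANTS = {("billing-svc", "read:invoices")}


@dataclass
class Request:
    source_ip: str
    identity: str
    token: str
    action: str


def perimeter_allows(source_ip: str) -> bool:
    # Perimeter model: anything whose address is inside the network is trusted.
    return ip_address(source_ip) in INTERNAL


def sign(identity: str) -> str:
    # Issue a token binding the caller's identity to the shared key.
    return hmac.new(SECRET, identity.encode(), hashlib.sha256).hexdigest()


def identity_allows(req: Request) -> bool:
    # Per-request check: who the caller is and what it is granted,
    # regardless of where on the network the request came from.
    if not hmac.compare_digest(req.token, sign(req.identity)):
        return False
    return (req.identity, req.action) in GRANTS
```

A compromised host at `10.1.2.3` with a forged token passes `perimeter_allows` but fails `identity_allows`, while a legitimate caller outside the range with a valid token fails the first check and passes the second. The IP rule says only where a request came from; the identity check says who is asking and what they may do.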